Optimizing a given metric is a central aspect of most current AI approaches, yet overemphasizing metrics leads to manipulation, gaming, a myopic focus on short-term goals, and other unexpected negative consequences. This poses a fundamental challenge in the use and development of AI. We first review how metrics can go wrong in practice and how aspects of our online environment and current business practices are exacerbating these failures. We then put forward an evidence-based framework that takes steps toward mitigating the harms caused by overemphasis of metrics within AI by: (1) using a slate of metrics to get a fuller and more nuanced picture, (2) combining metrics with qualitative accounts, and (3) involving a range of stakeholders, including those who will be most impacted.

The Problem with Metrics is a Fundamental Problem for AI

Rachel Thomas, David Uminsky

Goodhart’s Law states that “When a measure becomes a target, it ceases to be a good measure.” At their heart, what most current AI approaches do is optimize metrics. The practice of optimizing metrics is neither new nor unique to AI, yet AI can be particularly efficient (even too efficient!) at doing so.

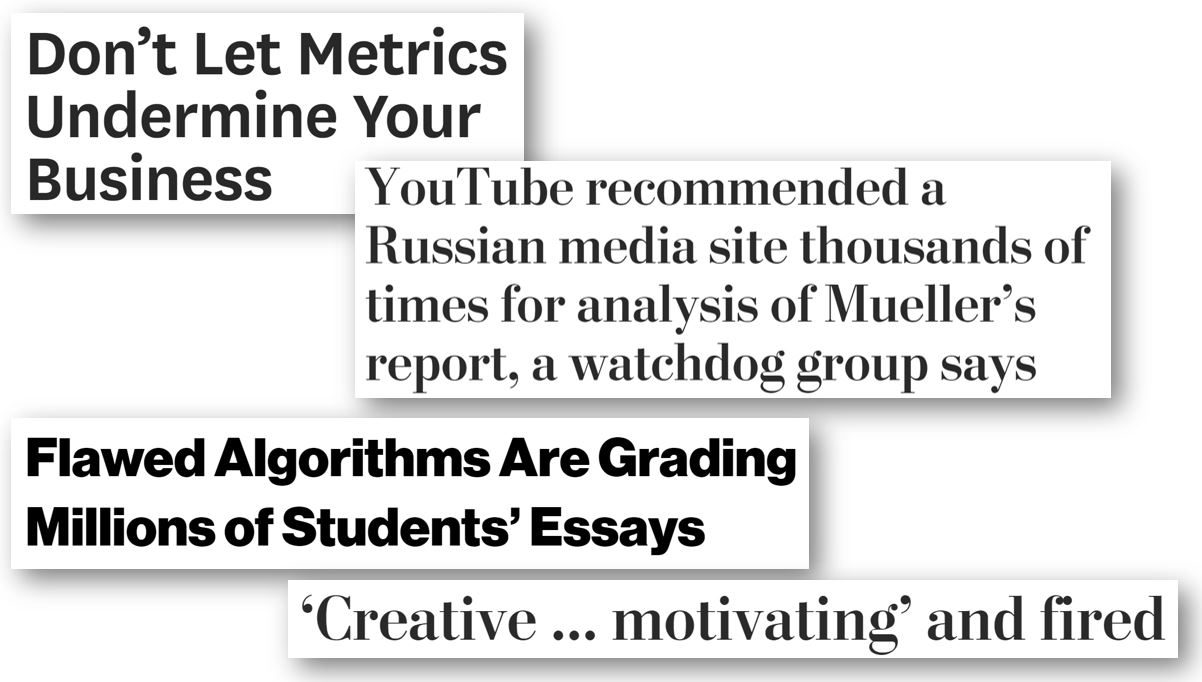

This is important to understand because AI heightens any risks of optimizing metrics. While metrics can be useful in their proper place, there are harms when they are unthinkingly applied. Some of the scariest instances of algorithms run amok (such as Google’s algorithm contributing to radicalizing people into white supremacy, teachers being fired by an algorithm, or essay grading software that rewards sophisticated garbage) all result from over-emphasizing metrics. We have to understand this dynamic in order to understand the urgent risks we face from the misuse of AI.
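The Goodhart dynamic can be made concrete with a toy model. The sketch below is purely hypothetical and not taken from the paper: `true_value` stands in for the goal we actually care about, `proxy_metric` for the measurable stand-in, and `optimize_proxy` for an optimizer that only ever sees the proxy. The two agree at first, but because the optimizer is rewarded for pushing the proxy without limit, it overshoots the point where the real goal is best served.

```python
"""Toy illustration of Goodhart's Law (hypothetical model, not from the paper)."""


def true_value(x: float) -> float:
    # Hypothetical "real goal": improves with x at first, then degrades; peaks at x = 5.
    return x - 0.1 * x ** 2


def proxy_metric(x: float) -> float:
    # Hypothetical proxy: tracks the real goal for small x but rewards larger x without limit.
    return x


def optimize_proxy(steps: int, step_size: float = 1.0) -> list[tuple[float, float, float]]:
    """Greedy hill-climbing on the proxy only; records (x, proxy, true value) at each step."""
    x = 0.0
    history = [(x, proxy_metric(x), true_value(x))]
    for _ in range(steps):
        candidate = x + step_size
        # The optimizer compares proxy scores only; it never consults true_value.
        if proxy_metric(candidate) > proxy_metric(x):
            x = candidate
        history.append((x, proxy_metric(x), true_value(x)))
    return history


if __name__ == "__main__":
    for x, p, t in optimize_proxy(steps=12):
        print(f"x={x:5.1f}  proxy={p:5.1f}  true={t:6.2f}")
```

Running it shows the proxy rising at every step while the true value peaks around x = 5 and then turns negative. The more efficiently the proxy is optimized, the further the outcome drifts from the goal it was meant to measure.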

Continued here
